Testing is a core component of pay-per-click advertising. Regardless of strategy or features, proper testing is critical for determining what works and, importantly, what doesn’t.

But automation and machine learning make PPC testing harder today. Say I have a Google Ads campaign set to a Maximize Conversions bid strategy. Even with two ads running against each other where the only difference is the call-to-action, Google will automatically prefer one ad over the other. The bid strategy shows the ad that Google expects to generate the most conversions, so one ad might receive only 20% of the impressions despite the advertiser’s intention that each receive 50%.

Fortunately, Google has introduced Experiments, in three types:

- Custom,

- Ad Variations,

- Video.

Custom Experiments

Custom experiments are the most common and are available for Search and Display campaigns (not Shopping). The experiment works by copying an existing campaign and implementing a change. For example, an advertiser could test a base campaign with an enhanced cost-per-click bid strategy against another with a maximize conversions bid strategy.

When setting up the experiment, choose the traffic split. A 50% split gives the cleanest comparison between the base and the trial. Then choose a cookie-based or search-based experiment. I recommend cookie-based because it randomly assigns each user to either the original or the experiment, so a given user sees only one version. Search-based assigns each search at random, so users who conduct multiple searches could see both variations, diluting the results.
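
To make the difference concrete, here is a minimal Python sketch of the two assignment styles. It is an illustration only, not how Google implements the split: the hashing approach, the function names, the made-up user IDs, and the five searches per user are all my assumptions.

```python
import hashlib
import random


def cookie_based_arm(user_id: str, trial_share: float = 0.5) -> str:
    """Hypothetical cookie-style split: hash the user ID so the same user
    always lands in the same arm."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # uniform value in [0, 1)
    return "trial" if bucket < trial_share else "base"


def search_based_arm(rng: random.Random, trial_share: float = 0.5) -> str:
    """Hypothetical search-style split: every search is assigned at random,
    regardless of who is searching."""
    return "trial" if rng.random() < trial_share else "base"


rng = random.Random(42)                       # fixed seed for repeatable runs
users = [f"user-{i}" for i in range(1000)]    # made-up user IDs
searches_per_user = 5                         # assumed for illustration

cookie_mixed = 0  # users who saw both arms under the cookie-style split
search_mixed = 0  # users who saw both arms under the search-style split
for user in users:
    cookie_arms = {cookie_based_arm(user) for _ in range(searches_per_user)}
    search_arms = {search_based_arm(rng) for _ in range(searches_per_user)}
    cookie_mixed += len(cookie_arms) > 1
    search_mixed += len(search_arms) > 1

print(f"Users who saw both arms (cookie-style): {cookie_mixed}")
print(f"Users who saw both arms (search-style): {search_mixed}")
```

In this toy model no user sees both arms under the cookie-style split, while most users with several searches see both under the search-style split. That overlap is the dilution I'm trying to avoid.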

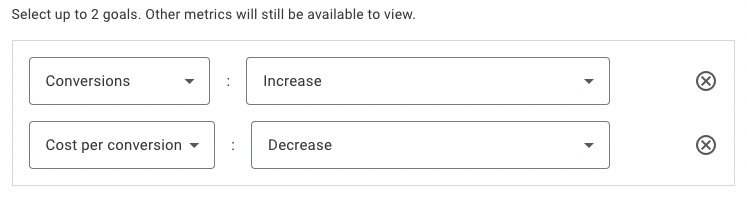

Custom experiments allow up to two goals. In the example below, I’m hoping for conversions to increase and the cost per conversion to decrease.

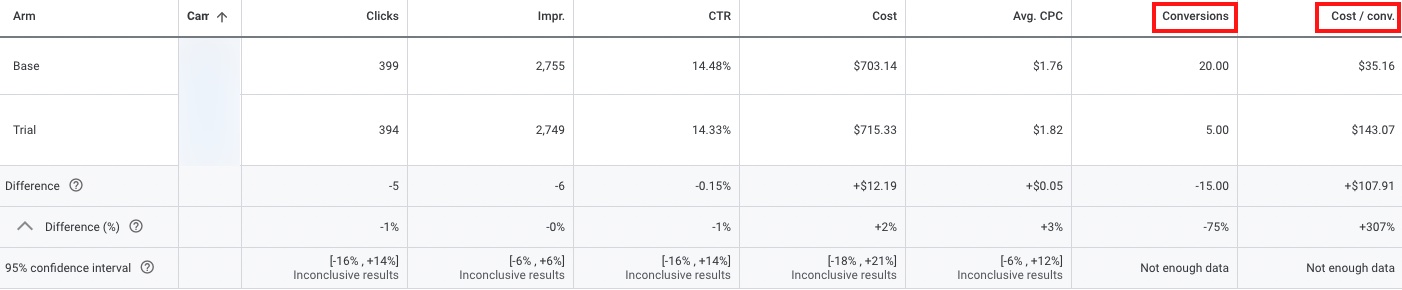

When viewing the experiment results, the dashboard shows the two test metrics, conversions (“Conversions”) and cost per conversion (“Cost/conv.”), along with others such as clicks (“Clicks”) and impressions (“Impr.”).
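
For anyone who exports those numbers, the base-versus-trial comparison is simple arithmetic. The sketch below is a rough illustration with made-up figures. The `ArmResults` class and `percent_change` helper are hypothetical names of mine, not part of any Google Ads tool.

```python
from dataclasses import dataclass


@dataclass
class ArmResults:
    """Totals for one arm of an experiment (hypothetical structure)."""
    clicks: int
    impressions: int
    conversions: float
    cost: float

    @property
    def cost_per_conversion(self) -> float:
        # Avoid dividing by zero when an arm has no conversions yet.
        return self.cost / self.conversions if self.conversions else float("inf")


def percent_change(base: float, trial: float) -> float:
    """Relative change of the trial versus the base, in percent."""
    return (trial - base) / base * 100.0


# Made-up numbers for illustration only.
base = ArmResults(clicks=1200, impressions=40000, conversions=60, cost=3000.0)
trial = ArmResults(clicks=1150, impressions=39500, conversions=75, cost=3000.0)

conv_change = percent_change(base.conversions, trial.conversions)
cpa_change = percent_change(base.cost_per_conversion, trial.cost_per_conversion)

print(f"Conversions: {conv_change:+.1f}%")
print(f"Cost/conv.:  {cpa_change:+.1f}%")
```

With these invented figures the trial hits both goals: conversions rise and the cost per conversion falls. A real experiment also needs enough data for the difference to be meaningful, which this sketch does not check.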

Advertisers can run the experiment with an end date or indefinitely — and apply or cancel it at any time.

Other Custom experiments include:

- Conversion actions (for example, optimize for purchases versus email signups).

- Display creatives.

- Keyword match types.

Ad Variations

Ad testing was easier before Google’s Smart Bidding and Responsive Search Ads. Advertisers could set the ad rotation to “Do not optimize: Rotate ads indefinitely,” and Google would honor the request. That option is still available, but Smart Bidding largely ignores it. Responsive Search Ads allow for testing up to 15 headlines and four description lines simultaneously, often negating the need for multiple ads.

Testing landing pages or ads with a specific message is harder to execute without using Ad Variations. Ad Variations allow for 50-50 tests across ads in one or many campaigns that meet your criteria.

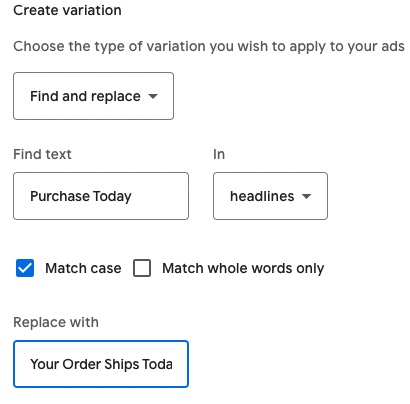

Say a call-to-action in an RSA is “Purchase Today,” and you want to test “Your Order Ships Today.” To test across all headlines, go to “Create variation” and select “Find and replace” in the initial drop-down menu.

Then:

- Insert “Purchase Today” in the “Find text” field,

- Choose “headlines” for the corresponding drop-down,

- Click “Match case,” and

- Type “Your Order Ships Today” in the “Replace with” field.
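
The steps above amount to a case-sensitive find and replace across headlines. Here is a small Python sketch of that logic, useful for previewing what the variation would look like before building it. The `find_and_replace` function and the sample headlines are my own illustration, not Google’s implementation. The 30-character check reflects the headline limit for responsive search ads.

```python
import re

HEADLINE_MAX_CHARS = 30  # character limit for responsive search ad headlines


def find_and_replace(headlines, find_text, replace_with, match_case=True):
    """Return new headlines with find_text swapped for replace_with.

    Hypothetical helper that mirrors the "Find text", "Match case", and
    "Replace with" fields described above.
    """
    flags = 0 if match_case else re.IGNORECASE
    pattern = re.compile(re.escape(find_text), flags)
    # A function replacement keeps any backslashes in replace_with literal.
    return [pattern.sub(lambda _match: replace_with, h) for h in headlines]


# Made-up headlines for illustration.
base_headlines = [
    "Purchase Today",
    "Free Shipping on All Orders",
    "purchase today and save",
]

trial_headlines = find_and_replace(
    base_headlines, "Purchase Today", "Your Order Ships Today"
)

# Flag any trial headline that would exceed the character limit.
too_long = [h for h in trial_headlines if len(h) > HEADLINE_MAX_CHARS]

for before, after in zip(base_headlines, trial_headlines):
    print(f"{before!r} -> {after!r}")
print(f"Over the limit: {too_long}")
```

With “Match case” on, the lowercase “purchase today and save” is left alone. Turning it off would replace that text too and, in this made-up example, push the headline past 30 characters. That is one reason I prefer matching the case.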

As with Custom experiments, you’ll see the performance change between the base and the trial. You can view the overall performance and the results by individual ads.

Unfortunately, Ad Variations does not allow testing pinned headlines or description lines unless the base ad already has a pin. Pinned assets ensure that a message always shows in the position an advertiser designates. If we pin “Purchase Today” to headline 2, the replacement message, “Your Order Ships Today,” would also always show there. With unpinned assets the text will still change, but we won’t know which headline or description position it appears in. Nonetheless, Ad Variations are worthwhile in my experience.

Other Ad Variations to test include:

- Landing pages,

- A copyright symbol on the brand name,

- URL paths.

Video Experiments

The rise of video ads necessitates testing. That’s the purpose of Video experiments: to determine what works best on YouTube. Video experiments require at least two base campaigns with the same settings but different videos. For example, a store advertising the latest running shoe could experiment with two videos. The first might include close-ups of the shoe, while the second shows someone wearing it.

Video experiments allow up to four campaigns (videos) to run concurrently, with two metrics to test: brand lift and conversions. Brand lift measures the effectiveness (i.e., brand recall) of the ads based on survey responses. It’s especially relevant to video campaigns, which don’t typically generate conversions. Video experiments, as with the others, provide results in real time.