My first step in setting up a new AdWords campaign is to test the ad copy. AdWords has long recommended running three or more ads in each ad group, and testing new messaging is standard industry practice.

The most common protocol for testing ad copy in a single ad group follows this pattern:

- Write two to three ad variations to start, ideally each with a basic hypothesis behind it.

- Allow enough time and data to accrue for a statistically significant winner to emerge.

- Pause the underperforming ads, leaving one “champion.”

- Analyze why the champion won and why the underperformers did not.

- Write one or two new “challenger” ads, each again testing a hypothesis.

- Repeat the process.
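For anyone tracking tests outside a tool, one round of this champion/challenger pattern can be sketched in Python. This is a minimal sketch under stated assumptions, not how AdWords or any particular tool picks winners: it judges ads on click-through rate only, uses a pooled two-proportion z-test with a hypothetical 95% confidence threshold, and makes no correction for comparing several ads at once. The `Ad` class and the `confidence_better` and `run_round` functions are illustrative names.

```python
import math
from dataclasses import dataclass


@dataclass
class Ad:
    name: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        # Click-through rate; zero if the ad has no impressions yet.
        return self.clicks / self.impressions if self.impressions else 0.0


def confidence_better(a: Ad, b: Ad) -> float:
    """One-sided confidence that ad `a` has a higher CTR than ad `b`,
    using a pooled two-proportion z-test (normal approximation)."""
    n1, n2 = a.impressions, b.impressions
    if n1 == 0 or n2 == 0:
        return 0.5  # no evidence either way
    pooled = (a.clicks + b.clicks) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.5
    z = (a.ctr - b.ctr) / se
    # Standard normal CDF expressed with the error function.
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


def run_round(ads: list, threshold: float = 0.95):
    """Return (champion, ads_to_pause) once the CTR leader beats every other
    ad at `threshold` confidence; otherwise (None, []) to keep the test running."""
    leader = max(ads, key=lambda ad: ad.ctr)
    others = [ad for ad in ads if ad is not leader]
    if all(confidence_better(leader, other) >= threshold for other in others):
        return leader, others
    return None, []


if __name__ == "__main__":
    champion, to_pause = run_round([Ad("A", 10000, 300), Ad("B", 10000, 200)])
    print(champion.name, [ad.name for ad in to_pause])  # A ['B']
```

When `run_round` returns `None`, the test simply needs more time and data; otherwise the returned ads get paused and the next challengers are written.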

The main benefit of a testing process is improved performance in key indicators, such as a higher click-through rate, a higher conversion rate, or a larger average purchase.
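Each of these key indicators is a simple ratio. As a minimal sketch, assuming you have per-ad totals for impressions, clicks, conversions, and revenue exported from a report (the `AdStats` name and its fields are hypothetical), they can be computed like this:

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    impressions: int
    clicks: int
    conversions: int
    revenue: float  # total purchase value attributed to the ad

    def ctr(self) -> float:
        # Click-through rate: share of impressions that led to a click.
        return self.clicks / self.impressions if self.impressions else 0.0

    def conversion_rate(self) -> float:
        # Share of clicks that led to a conversion.
        return self.conversions / self.clicks if self.clicks else 0.0

    def average_purchase(self) -> float:
        # Average revenue per conversion (average order value).
        return self.revenue / self.conversions if self.conversions else 0.0


if __name__ == "__main__":
    stats = AdStats(impressions=2000, clicks=80, conversions=4, revenue=300.0)
    print(stats.ctr(), stats.conversion_rate(), stats.average_purchase())  # 0.04 0.05 75.0
```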

A solid testing regimen should keep KPIs trending upward, or at least keep them from declining. Constant growth is more or less impossible, especially in competitive niches where rivals can see your ad copy and imitate it.

Another, often overlooked, benefit is the insight you gain about your target customers. Suppose your ad test were set up to test the hypothesis that a dollar-off discount is more effective than a percent-off discount. The results would give you a powerful advantage: if the hypothesis holds, you could start offering dollar-off discounts in your email and social media marketing, confident that they outperform percent-off discounts.

Automated Testing

I’ve long used Excel pivot tables to aggregate data and identify winning ad variations, or even winning phrases. That process can be laborious, which has made it a target of various automation efforts.
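The pivot-table aggregation can also be reproduced in a few lines of Python. This sketch assumes the report has been exported as rows with hypothetical `ad`, `headline`, `impressions`, `clicks`, and `conversions` columns; it sums the metrics per ad, and per phrase across every ad whose headline contains that phrase.

```python
from collections import defaultdict

METRICS = ("impressions", "clicks", "conversions")


def pivot_by(rows, key):
    """Sum each metric per distinct value of `key`, like a pivot table
    with `key` as the row field and the metrics as summed values."""
    totals = defaultdict(lambda: dict.fromkeys(METRICS, 0))
    for row in rows:
        bucket = totals[row[key]]
        for metric in METRICS:
            bucket[metric] += row[metric]
    return dict(totals)


def pivot_by_phrase(rows, phrases, text_key="headline"):
    """Sum each metric across every row whose ad text contains the phrase
    (case-insensitive), so phrases can be compared across ad variations."""
    totals = {}
    for phrase in phrases:
        matching = [r for r in rows if phrase.lower() in r[text_key].lower()]
        totals[phrase] = {m: sum(r[m] for r in matching) for m in METRICS}
    return totals


if __name__ == "__main__":
    rows = [  # hypothetical daily report rows
        {"ad": "A", "headline": "Save $10 Today", "impressions": 1000, "clicks": 40, "conversions": 2},
        {"ad": "A", "headline": "Save $10 Today", "impressions": 500, "clicks": 25, "conversions": 1},
        {"ad": "B", "headline": "Save 10% Today", "impressions": 1200, "clicks": 30, "conversions": 3},
    ]
    print(pivot_by(rows, "ad"))
    print(pivot_by_phrase(rows, ["$10", "10%"]))
```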

AdWords itself has offered campaign settings that “optimize” ad rotation. There used to be two options, optimize for clicks or optimize for conversions; now there is just one, “optimize.” (I addressed this in September, in “AdWords Changes Coming Fall 2017.”) The new “optimize” setting is AdWords’ poor man’s version of automated ad testing.

And it appears AdWords is doubling down.

In a recent interview on Search Engine Land, Matt Lawson, director of performance ads marketing at Google, spoke with Nicolas Darveau-Garneau, Google’s chief search evangelist. Darveau-Garneau offered a few telling comments about ad testing, including this one:

The faster people realize that ad testing is a thing of the past, the better off they’ll be. Optimize your ad rotation, enable as many extensions as you can, and add a bunch of ads to your ad groups. Using optimized rotation uses the most appealing ad at the time of each auction, for each individual customer.

Intuition Required

Relying on Google to choose the best ad creates a potential conflict, as Google presumably wants to maximize its ad revenue. I’ve seen many examples of Google optimizing toward results that were subpar for the advertiser.

So what is an advertiser to do? Certainly don’t try to outmuscle the optimization algorithms. Let them work, but in narrower, more controlled ways.

There are numerous automated tools for pay-per-click management. Most have at least some functionality for ad testing. I use Adalysis, but there are many others. (I’m not an affiliate or an investor in Adalysis, just a customer.)

I mainly use Adalysis for two things: automating the testing itself and confirming my intuition about ad copy, especially my analysis of the “why” behind a winning ad. The tool looks at the copy in each ad group and notifies me when a test has reached statistical significance.

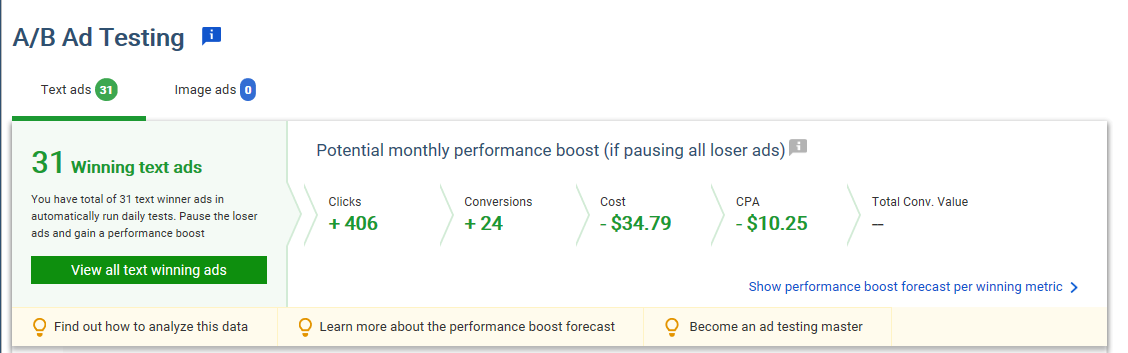

Adalysis looks at the copy in each ad group and sends notifications when a test has reached statistical significance.

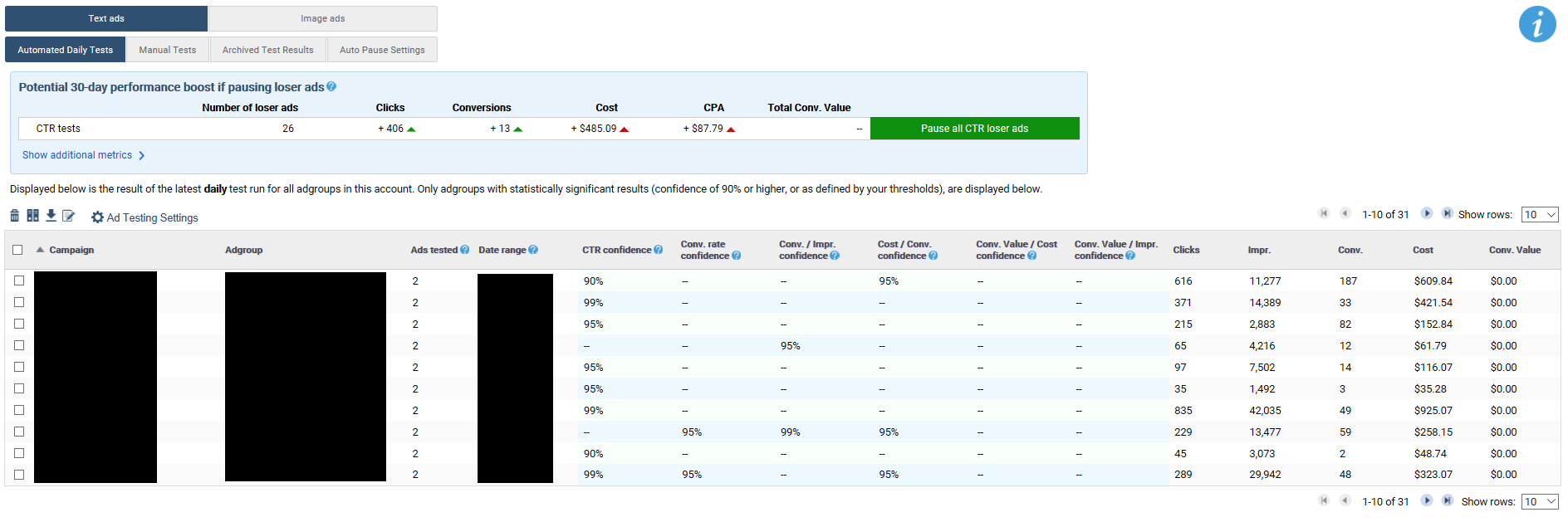

After producing a list of ad groups with winning tests, Adalysis provides confidence levels for various metrics, such as click-through rate, conversion rate, and conversions per impression. It also shows the data behind each metric.

After producing a list of ad groups with winning tests, Adalysis provides confidence levels for various metrics.
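How Adalysis calculates its confidence levels isn’t covered here, so the following only illustrates one common approach. It assumes a one-sided pooled two-proportion z-test for each metric and at most one conversion per click (AdWords can count several, which would break the proportion assumption). The `confidence_higher` and `confidence_report` functions and the field names are hypothetical.

```python
import math

# Each metric is a rate: (numerator field, denominator field).
# Assumes conversions never exceed clicks, so every rate is a true proportion.
METRICS = {
    "ctr": ("clicks", "impressions"),
    "conversion_rate": ("conversions", "clicks"),
    "conversions_per_impression": ("conversions", "impressions"),
}


def confidence_higher(s1, n1, s2, n2):
    """One-sided confidence that rate s1/n1 exceeds s2/n2,
    from a pooled two-proportion z-test (normal approximation)."""
    if n1 == 0 or n2 == 0:
        return 0.5  # no data for one side, so no evidence either way
    pooled = (s1 + s2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.5
    z = (s1 / n1 - s2 / n2) / se
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))  # standard normal CDF


def confidence_report(ad_a, ad_b):
    """For each metric, the confidence that ad_a beats ad_b plus the raw counts."""
    report = {}
    for metric, (num, den) in METRICS.items():
        report[metric] = {
            "confidence": confidence_higher(ad_a[num], ad_a[den], ad_b[num], ad_b[den]),
            "ad_a": (ad_a[num], ad_a[den]),
            "ad_b": (ad_b[num], ad_b[den]),
        }
    return report


if __name__ == "__main__":
    ad_a = {"impressions": 10000, "clicks": 300, "conversions": 30}
    ad_b = {"impressions": 10000, "clicks": 200, "conversions": 12}
    for metric, row in confidence_report(ad_a, ad_b).items():
        print(f"{metric}: {row['confidence']:.1%} confidence")
```

With these sample numbers, ad A’s leads in CTR and conversions per impression clear 99% confidence, while its conversion-rate lead falls just short of 95%. That is why the metric you prioritize changes which tests you act on.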

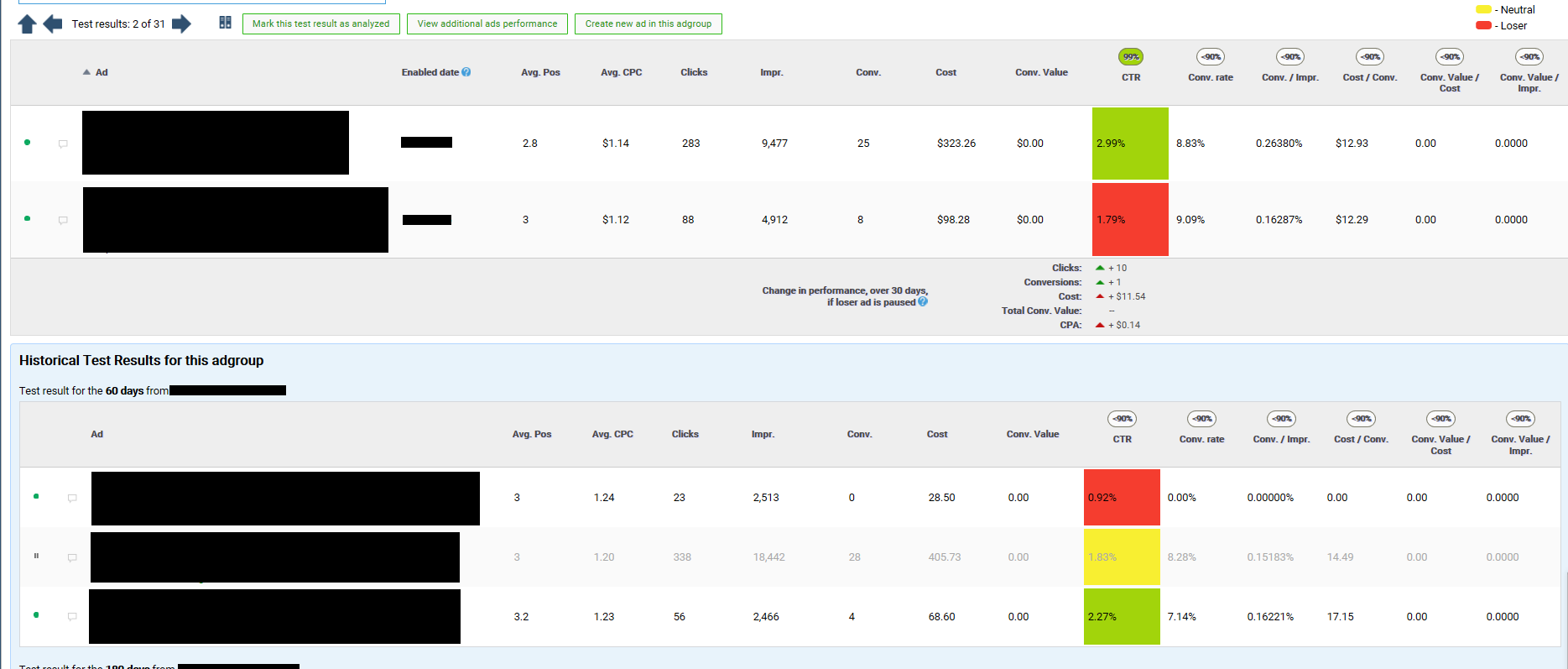

Depending on your preferred metric, you can quickly determine which tests to act on. Clicking on a specific ad group shows you which ad is winning and also includes your history of ad tests, so you don’t repeat them.

Clicking on a specific ad group shows you which ad is winning and also includes your history of ad tests, so you don’t repeat them.

Used this way, Adalysis constantly monitors for winners, and I step in only to supply the intuition. You could use other tools, or do it manually with Excel and reports. The tool isn’t as important as the principles behind it.
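If you do it manually, a simple log can play the role of a tool’s test history and keep you from repeating old tests. This is a hypothetical sketch: `AdTestLog` stores the hypotheses already tested in each ad group and checks new ones against them, ignoring only case and extra whitespace, so genuinely reworded hypotheses will not be caught.

```python
from dataclasses import dataclass, field


@dataclass
class AdTestLog:
    """Hypothetical record of the hypotheses already tested in each ad group."""
    tested: dict = field(default_factory=dict)  # ad group name -> set of hypotheses

    @staticmethod
    def _normalize(hypothesis: str) -> str:
        # Ignore case and extra whitespace so the same wording typed slightly
        # differently still counts as a repeat.
        return " ".join(hypothesis.lower().split())

    def record(self, ad_group: str, hypothesis: str) -> None:
        self.tested.setdefault(ad_group, set()).add(self._normalize(hypothesis))

    def already_tested(self, ad_group: str, hypothesis: str) -> bool:
        return self._normalize(hypothesis) in self.tested.get(ad_group, set())


if __name__ == "__main__":
    log = AdTestLog()
    log.record("Running Shoes", "Dollar-off beats percent-off")
    print(log.already_tested("Running Shoes", "dollar-off  beats Percent-off"))  # True
```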

Human Element

For many advertisers, it’s too early to rely on Google for ad testing. A testing program requires intuition, even if Google has enough data to work with, which is not always the case. Keep the human element in the process, but use tools such as Adalysis to improve efficiency.