
Google’s head spam cop, Matt Cutts, announced the impending launch of a new over-optimization penalty to “level the playing ground.” The disclosure came earlier this month at the South By Southwest (SXSW) conference in Austin, Texas, during an open panel titled “Dear Google & Bing: Help Me Rank Better!” that featured Google’s and Bing’s webmaster and web spam representatives. Google’s goal for the penalty is to give sites that have produced great content a better chance to rank and drive organic search traffic and conversions.

Pretty much every site owner can pull up the search results for their dearest trophy phrase and point out at least one site that just shouldn’t be allowed to rank. Competitive ire aside, some sites have poor content but focus extra hard on their search engine optimization efforts. These sites are easy to spot. They usually have a keyword domain, lots of keyword-rich internal linking, and heavily optimized title tags and body content. Their link portfolios are heavily optimized as well. But their content is weak, their value proposition is low, and it’s obvious to human observers that they’re only ranking because of their SEO. The upcoming over-optimization penalty would theoretically change the playing field so that sites with great content and higher user value rank above sites with excessive SEO.

What Qualifies as Over-Optimization?

No one but Google knows exactly what counts as “over-optimization.” However, Cutts did mention that Google is looking at sites by “people who sort of abuse it whether they throw too many keywords on the page, or whether they exchange way too many links, or whatever they’re doing to sort of go beyond what a normal person would expect in a particular area.” It’s widely believed that keyword stuffing and link exchanges are already spam signals in Google’s algorithm. So either Google intends to ratchet up the penalty or dampening that those existing signals trigger, or it has new over-optimization signals in mind as well.
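To make “too many keywords on the page” a little more concrete, here is a minimal Python sketch of a keyword-density check. It is an illustration only: the function name `keyword_density` and the simple word tokenizer are my own assumptions, and nothing here reflects how Google actually measures keyword stuffing or where it draws the line.

```python
import re


def keyword_density(text: str, phrase: str) -> float:
    """Return the share of the page's words taken up by occurrences of `phrase`.

    Hypothetical helper for illustration; not a known Google metric.
    """
    # Crude tokenizer: lowercase runs of letters, digits, and apostrophes.
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Count every position where the phrase's words appear in sequence.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits * n / len(words)


if __name__ == "__main__":
    body = "Flannel shirts! Buy flannel shirts here."
    print(f"{keyword_density(body, 'flannel shirts'):.0%} of words are the keyword")
```

A page whose density for its trophy phrase sits far above that of comparable pages is the kind of thing a human reviewer would notice right away. What threshold, if any, an algorithm would use is anyone’s guess.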

5 Signals that Should Qualify as Over-Optimization

Because I can’t believe that the bits Cutts references are all there is to the over-optimization algorithm update, I’ve been daydreaming about what I would classify as over-optimization. Keep in mind that I have no inside knowledge as to what they’re planning. In other words, don’t run out and change all these things just because you read this article. But these tactics are on my list because they leave a bad taste in my mouth when I come across them and I sure hope they’re on Cutts’ list as well.

- Linking to a page from that same page with optimized anchor text. If the page is www.jillsfakesite.com/flannel-shirts, and in the body copy of that page I link the words “flannel shirts” to the same page the words are on, i.e., www.jillsfakesite.com/flannel-shirts, that should count as over-optimization.

- Linking repeatedly from body copy to a handful of key pages with optimized anchor text. If 33 of my 100 pages link to www.jillsfakesite.com from the body copy with the anchor text “Jills Fake Site,” that should count as over-optimization.

- Changing the “Home” anchor text to your most valuable keyword. Usually the home link is the site’s logo. But in the cases where the home link is textual and has been optimized with the juiciest keyword, that should count as over-optimization.

- Overly consistent and highly optimized anchor text on backlinks. If 10 of the 100 links to a page contain the same highly optimized anchor text, such as “Jill’s Fake Site, the Fakest Site Selling Flannel Shirts on the Web,” that should count as over-optimization.

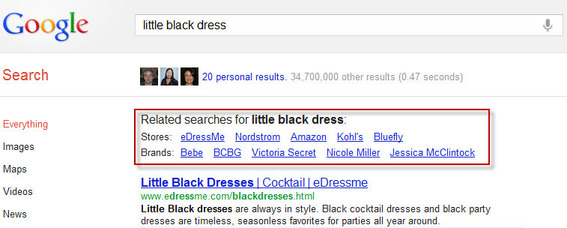

- Generic keyword domain name. They have way too much impact on rankings, and need to be demoted in importance. Now I’m sure it’s difficult to determine which words are generic and which are brands. But Google seems to have cracked that nut at least partially with its related brands results. Surely they must be close to understanding the difference between the non-branded domain littleblackdress.com and the brand whitehouseblackmarket.com.

Google’s related stores and brands search results for “little black dress”
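If you want to audit your own site for the anchor-text tactics in the list above, a rough Python sketch might look like the following. The helper names (`normalize_url`, `is_self_link`, `top_anchor_share`) are hypothetical, the URL normalization is deliberately crude and assumes absolute URLs, and the ratios it reports are just my own yardsticks from this article, not thresholds Google has published.

```python
from collections import Counter
from urllib.parse import urlsplit


def normalize_url(url: str) -> str:
    """Reduce a URL to host + path so trivially different forms compare equal."""
    if "://" not in url:
        url = "http://" + url  # assume a bare "www.example.com/page" style URL
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    # Ignore the scheme, query string, fragment, and any trailing slash.
    return host + parts.path.rstrip("/")


def is_self_link(page_url: str, href: str) -> bool:
    """True when a link on `page_url` points back at that same page."""
    return normalize_url(page_url) == normalize_url(href)


def top_anchor_share(anchors: list[str]) -> tuple[str, float]:
    """Return the most common anchor text and the fraction of links using it."""
    if not anchors:
        return ("", 0.0)
    counts = Counter(a.strip().lower() for a in anchors)
    text, n = counts.most_common(1)[0]
    return text, n / len(anchors)


if __name__ == "__main__":
    page = "www.jillsfakesite.com/flannel-shirts"
    print(is_self_link(page, "http://www.jillsfakesite.com/flannel-shirts/"))

    # 33 of 100 internal links share one optimized anchor, as in the example above.
    anchors = ["Jills Fake Site"] * 33 + [f"link {i}" for i in range(67)]
    text, share = top_anchor_share(anchors)
    print(f"{share:.0%} of links use the anchor text '{text}'")
```

The same `top_anchor_share` call works for a backlink export: feed it the anchor text of every inbound link to a page and see how much of the portfolio repeats one phrase.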

So there you have it, my five least favorite over-optimization tactics, all of which I hope become algorithmic spam signals. Cutts’ transcribed comments on the penalty are below, but it’s worth going to the “Dear Google & Bing: Help Me Rank Better!” session page to listen to the entire recording. You’ll find the transcribed tidbit 16:09 into the hour-long audio clip.

According to Matt Cutts, “Normally we don’t preannounce changes, but there is something we’ve been working on in the last few months and hopefully in the next couple of months or so, you know, in the coming weeks, we hope to release it. And the idea basically is to try to level the playing ground a little bit. So all of those people who have sort of been doing, for lack of a better word, over-optimization or overly doing their SEO, compared to the people who are just making great content and trying to make a fantastic site, we want to sort of make that playing field a little bit more level. And so that’s the sort of thing where we try to make the website, the Googlebot smarter, we try to make our relevance more adaptive so that if people don’t do SEO we handle that, and then we also start to look at the people who sort of abuse it whether they throw too many keywords on the page, or whether they exchange way too many links, or whatever they’re doing to sort of go beyond what a normal person would expect in a particular area. And so that is something where we continue to pay attention and we continue to work on it, and it is an active area where we’ve got several engineers on my team working on that right now.”