A web page must reside in Google’s index before it can appear in organic search results. A page can rank without Google crawling it but never without being indexed.

Thus monitoring a website’s indexation is critical.

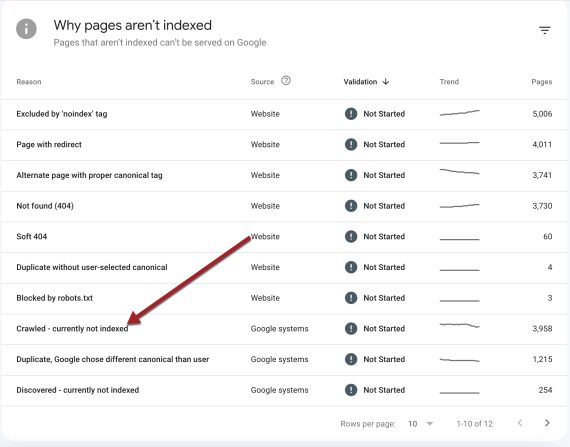

Google’s Search Console helpfully provides a site’s index status at Indexing > Pages. The section contains a “Why pages aren’t indexed” list, which includes an entry for “Crawled – currently not indexed.”

That status can be confusing. Why would Google crawl a page but not index it? Is the page inferior in some way?

No. Failure to index is not necessarily a signal of poor quality.

Indexing 101

Google doesn’t always immediately apply algorithmic scores to pages it crawls, especially with newer sites. It sometimes gathers data first, then indexes.

Thus it’s not a quality issue as much as timing.

A low-ranking page is a better indicator of poor quality since Google presumably has data for its algorithm.

Certainly Google can remove a page from its index. That’s the other reason to monitor Search Console’s “Crawled – currently not indexed” list. A site with no new pages but a growing number in the “Crawled – currently not indexed” list has a problem.

For example, widespread deindexing occurred shortly after a core update in 2020. Google’s Gary Illyes confirmed that the pages were removed because of “low-quality and spammy content.”

In my experience, failure to index is common for sites with a half-million or more URLs for two reasons.

- Too big. The site has too many pages for Google to crawl and index them all. Google doesn’t have indexation maximums, but it does have crawl limitations. Thus a monster site could have both superior quality and spotty indexing.

- Poor visibility. The site has many pages that sit multiple clicks away from the home page or receive few internal links. I’ve seen sites where half the pages have just one or two internal links pointing to them. This signals to Google that those pages are unimportant.
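The poor-visibility check can be approximated from a crawl export. The sketch below is a minimal illustration, not a Google method: it assumes you already have a map of each page to the pages it links to (the `links` data here is invented), and the `max_depth` and `min_inlinks` cutoffs are arbitrary placeholders to tune for your own site.

```python
from collections import deque

# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/shop", "/blog"],
    "/shop": ["/", "/shop/shoes", "/blog"],
    "/blog": ["/", "/shop", "/blog/post-1"],
    "/shop/shoes": ["/shop", "/shop/shoes/red"],
    "/blog/post-1": ["/blog"],
    "/shop/shoes/red": [],
}


def click_depths(links, home):
    """Breadth-first search from the home page: depth = fewest clicks needed."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def inlink_counts(links):
    """Count distinct pages linking to each page, ignoring self-links."""
    counts = {page: 0 for page in links}
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                counts[target] = counts.get(target, 0) + 1
    return counts


def weak_pages(links, home, max_depth=3, min_inlinks=3):
    """Return (page, depth, inlinks) for pages that are deep or weakly linked.

    A depth of None means the page is unreachable from the home page.
    """
    depths = click_depths(links, home)
    inlinks = inlink_counts(links)
    flagged = []
    for page in sorted(set(links) | set(inlinks)):
        if page == home:
            continue
        depth = depths.get(page)
        count = inlinks.get(page, 0)
        if depth is None or depth > max_depth or count < min_inlinks:
            flagged.append((page, depth, count))
    return flagged


for page, depth, count in weak_pages(links, "/", max_depth=2, min_inlinks=2):
    print(f"{page}: depth={depth}, internal links in={count}")
```

Pages that show up here and also appear in “Crawled – currently not indexed” are reasonable first candidates for more internal links.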

Yet deindexing needs immediate attention if it’s getting worse or affects 25% or more of a site’s pages. The former typically results from core or helpful content algorithm updates. The latter is likely owing to poor site structure that buries pages, prompting Google to devalue them.
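Those two warning signs can be turned into a simple recurring check. The following is a rough sketch under stated assumptions: the `Snapshot` fields are hypothetical and filled in by hand from Search Console’s Indexing > Pages report, the figures are invented, and “getting worse” is interpreted here as a rising not-indexed count while the total page count has not grown.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    label: str        # e.g., the week the numbers were recorded
    total_pages: int  # pages known for the site at that time
    not_indexed: int  # "Crawled - currently not indexed" count


def needs_attention(history, share_threshold=0.25):
    """Return the reasons the latest snapshot deserves a closer look."""
    reasons = []
    latest = history[-1]

    # Sign 1: a quarter or more of the site's pages are not indexed.
    if latest.total_pages > 0:
        share = latest.not_indexed / latest.total_pages
        if share >= share_threshold:
            reasons.append("large_share")

    # Sign 2: the count is rising even though no new pages were added.
    if len(history) >= 2:
        previous = history[-2]
        count_grew = latest.not_indexed > previous.not_indexed
        no_new_pages = latest.total_pages <= previous.total_pages
        if count_grew and no_new_pages:
            reasons.append("worsening")

    return reasons


# Invented example figures.
history = [
    Snapshot("week 1", total_pages=1000, not_indexed=120),
    Snapshot("week 2", total_pages=1000, not_indexed=180),
]
print(needs_attention(history))
```

In this example the share is 18%, below the 25% threshold, but the count rose with no new pages, so only the worsening flag is raised.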