Website outages are disruptive enough to a business without the added worry of lost natural search performance. How you handle server outages and site downtime can affect how long natural search suffers and how badly.

When a site goes down, the server returns a 500-series (5xx) status code to indicate that it cannot fulfill the browser's request, often because it is temporarily unavailable. By default, shoppers usually see this in stark terms as a 500 or 503 error, as shown below.

A default 503 error page.

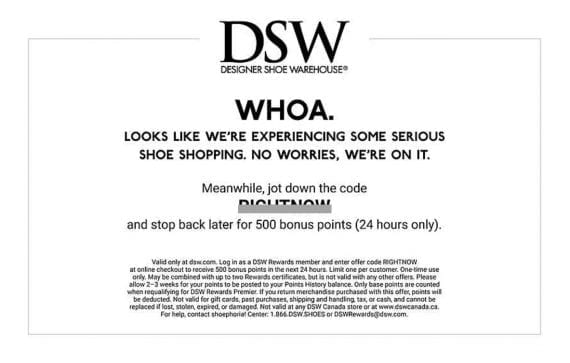

Unlike the more common 404 error, which signals that the browser requested a single missing file or page, the 500-series errors indicate that the server itself is in trouble and can't serve the requested page. Like 404 pages, 500 error pages can be customized to be friendlier, for example by adding a touch of humor or offering a discount to encourage visitors to return.

Everyone experiences outages, even the biggest names in ecommerce and around the Internet.

Server error message shown on Target.com during Cyber Monday 2015.

DSW’s server error page shown during a recent redesign encouraged shoppers to return by offering a discount.

For search engine optimization, a server outage can mean a drop in rankings, which is typically followed by a drop in traffic and sales. The search engines do not want to risk a poor user experience by sending searchers to sites that are down. However, for popular sites, they also don't want to stop ranking a site entirely during a short outage, because that would confuse searchers who use the search engine as a navigational tool to reach a specific site.

Typically, the search queries impacted the hardest are nonbranded queries like “toys” as opposed to branded queries like “walmart toys” that indicate a desire for a specific site. If your natural search traffic is driven primarily by branded searches, you may not see much of a performance wobble. However, if your site is earning traffic and sales from highly valuable nonbranded queries, the performance wobble could be more significant as the search engines reroute searchers to sites that are up.

How long your performance suffers varies from a day or two to several weeks, depending on how long the server was down.

If the outage is short and the search engines don't crawl before the server is back up, they might not see the outage at all. Remember that search engines are not omniscient and do not share data with each other. Only issues that get crawled directly affect natural search performance, and only for the engine that did the crawling.

In other words, if your site goes down at 10 a.m. and comes back up at 10:30 a.m., and Googlebot doesn't crawl your site until 10:31 a.m., then Google has no way of knowing that the site was down. Likewise, Bing's crawl does not inform Google's. Bingbot could crawl during the outage and notice the problem, but if Googlebot doesn't crawl until after the problem is resolved, your Google performance won't be affected.
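To find out whether a given bot actually crawled during an outage, you could check your server's access logs for its requests within the outage window. The Python sketch below assumes logs in the Apache/nginx "combined" format; the function name `bot_hits_during_outage` and the sample log lines are hypothetical, mirroring the 10 a.m. to 10:30 a.m. example above. It matches bots by user-agent substring only, which can be spoofed; Google documents a reverse DNS check for verifying genuine Googlebot traffic.

```python
import re
from datetime import datetime, timedelta, timezone

# Apache/nginx "combined" log format:
# ip ident user [time] "request" status bytes "referrer" "user agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)
TIME_FORMAT = "%d/%b/%Y:%H:%M:%S %z"  # e.g. 15/Jan/2024:10:31:07 -0500


def bot_hits_during_outage(lines, start, end, bot="Googlebot"):
    """Return (time, status, request) for each request from `bot` in [start, end]."""
    hits = []
    for line in lines:
        match = LOG_PATTERN.match(line)
        # Skip unparseable lines and other user agents (case-insensitive match).
        if not match or bot.lower() not in match.group("agent").lower():
            continue
        when = datetime.strptime(match.group("time"), TIME_FORMAT)
        if start <= when <= end:
            hits.append((when, int(match.group("status")), match.group("request")))
    return hits


# Hypothetical outage from 10:00 to 10:30 a.m. (UTC-5) and two sample log lines.
tz = timezone(timedelta(hours=-5))
start = datetime(2024, 1, 15, 10, 0, tzinfo=tz)
end = datetime(2024, 1, 15, 10, 30, tzinfo=tz)
sample = [
    '66.249.66.1 - - [15/Jan/2024:10:31:07 -0500] "GET /toys HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '157.55.39.1 - - [15/Jan/2024:10:12:44 -0500] "GET /toys HTTP/1.1" 503 312 '
    '"-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
]

print(bot_hits_during_outage(sample, start, end))  # [] (Googlebot came at 10:31)
for when, status, request in bot_hits_during_outage(sample, start, end, bot="bingbot"):
    print(when.isoformat(), status, request)  # 2024-01-15T10:12:44-05:00 503 GET /toys HTTP/1.1
```

An empty result for Googlebot means Google never saw the 503s, which matches the scenario described above; Bing, which did crawl during the window, is the only engine that recorded the outage.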

Even if the search engine’s bot crawls in time to notice a 500 series error, the issue will only impact natural search until the next time the bot crawls and sees that the site is back up.

Google engineer John Mueller characterized the impact this way: “My guess is something like this where if you have to take the server down for a day, you might see maybe a week, two weeks’, at the most maybe three weeks’ time where things are kind of in flux and settling down again, but it shouldn’t take much longer than that.”

There is no “penalty” as such for having a site outage. The decrease in natural search performance should last as long as the outage, plus a little time for the site to get re-crawled and for the engine to regain confidence in its stability. Because search engines crawl pages that are more valuable to their rankings more frequently, those pages will be the first on the site to be rediscovered as active.

Downtime happens on every site; it's a fact of life. However, if a site is down frequently, or for long periods when bots crawl, that search engine's confidence in the site's value to searchers can drop, and performance can suffer. During longer outages, search engines may reduce their crawl frequency or even de-index the site. According to Mueller, however, once Google discovers the site is back in service, it will re-index the site with the information about its previous relevance and authority intact.

When a site goes down, make sure your development team has your 500-series error pages ready to put into service. The HTTP status code returned for each page while the site is down matters: a 5xx code (typically 503 Service Unavailable) tells search engines that the server is unavailable and, in essence, asks them to check back later. It also keeps the error page itself from being indexed in place of your ecommerce content, as could happen if the page were served with a normal 200 OK status.
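Here is a minimal sketch, in Python, of a stand-in server that answers every URL with a friendly error page and a 503 status. It assumes a WSGI setup using only the standard library; the names `maintenance_app` and `run_maintenance_server`, port 8000, and the page copy are hypothetical, and in production this job usually falls to your web server, load balancer, or CDN configuration.

```python
from wsgiref.simple_server import make_server

# Hypothetical page copy; keep it friendly for shoppers.
MAINTENANCE_HTML = (
    b"<!DOCTYPE html><html><head><title>We'll be right back</title></head>"
    b"<body><h1>We'll be right back</h1>"
    b"<p>Our store is briefly unavailable. Please check back soon.</p>"
    b"</body></html>"
)


def maintenance_app(environ, start_response):
    """WSGI app that answers every URL with the friendly page and a 503 status."""
    # Crawlers act on this status line, not on the page text. Serving the same
    # page with "200 OK" would invite them to index it instead of your products.
    start_response("503 Service Unavailable", [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(MAINTENANCE_HTML))),
    ])
    return [MAINTENANCE_HTML]


def run_maintenance_server(port=8000):
    """Serve maintenance_app on a hypothetical port until interrupted.

    Call this only while the real application is offline.
    """
    with make_server("", port, maintenance_app) as server:
        server.serve_forever()
```

The design choice that matters is the status passed to `start_response`: the friendly HTML is for shoppers, while the 503 is what tells bots to come back later.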

If the outage is planned, such as during the launch of a redesign or a mass product refresh, indicate its expected length with a Retry-After HTTP header sent alongside the 503 status. This tells search engines how long the site is expected to be down.
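For a planned window, the `Retry-After` value can be derived from the scheduled end time. HTTP allows either a number of seconds or an HTTP-date (RFC 9110). The helpers below are a hypothetical Python sketch, and the window times are made up; how each search engine interprets the value is up to that engine.

```python
from datetime import datetime, timezone
from email.utils import format_datetime


def retry_after_seconds(planned_end, now=None):
    """Delay-seconds form: whole seconds from now until the planned end, never negative."""
    if now is None:
        now = datetime.now(timezone.utc)
    return str(max(0, int((planned_end - now).total_seconds())))


def retry_after_http_date(planned_end):
    """HTTP-date form; HTTP dates are always expressed in GMT."""
    return format_datetime(planned_end.astimezone(timezone.utc), usegmt=True)


# Hypothetical two-hour maintenance window (times must be timezone-aware).
window_start = datetime(2024, 1, 15, 14, 0, tzinfo=timezone.utc)
window_end = datetime(2024, 1, 15, 16, 0, tzinfo=timezone.utc)

print(retry_after_seconds(window_end, now=window_start))  # 7200
print(retry_after_http_date(window_end))  # Mon, 15 Jan 2024 16:00:00 GMT
```

Either string would be sent as a `("Retry-After", value)` response header on the 503. The seconds form is simpler; the date form stays accurate even if a response is delayed or cached briefly.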