Testing is fundamental to proper conversion rate optimization. Done well, an A/B test can help ecommerce marketers identify the best landing page design, which form of navigation works best, or even how and where to position page elements.

Unfortunately, there are at least three relatively common A/B testing mistakes that can lead to inaccurate results and, potentially, poor decisions. Avoid them, and your A/B tests will be more reliable.

1. Random Fluctuation

Statistical fluctuation, which is sometimes called random fluctuation, describes random changes in a set of data that are not related to the measured stimulus.

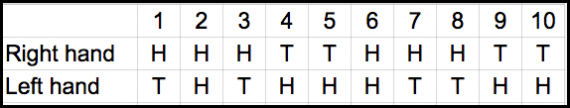

The classic example of random fluctuation is a coin toss. Many large studies (and common sense) show that in a fair coin toss or series of fair coin tosses, heads should come up about half of the time. More precisely, the probability of heads on any single toss is 1/2. Likewise, tails will land face up about half of the time.

If you don’t believe this, try flipping a fair coin 100,000 times and recording each result. Roughly 50,000 of the tosses will be heads.
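You can check this claim without flipping a real coin. The Python sketch below simulates fair tosses with the standard `random` module. The function name `fraction_heads` and the seed value are illustrative choices, not part of any testing tool.

```python
import random
from typing import Optional

def fraction_heads(n_flips: int, seed: Optional[int] = None) -> float:
    """Simulate n_flips fair coin tosses and return the fraction that come up heads."""
    rng = random.Random(seed)  # a seeded generator makes runs reproducible
    heads = sum(rng.random() < 0.5 for _ in range(n_flips))  # True counts as 1
    return heads / n_flips

print(f"10 flips:      {fraction_heads(10, seed=1):.2f} heads")
print(f"100,000 flips: {fraction_heads(100_000, seed=1):.4f} heads")
```

With only 10 flips the fraction can land well away from 0.5. With 100,000 flips it should sit very close to 0.5.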

But smaller tests can produce dramatically different results. Consider 10 right-handed coin tosses and 10 left-handed coin tosses, done specifically for this article. In both tests, heads came up 60 percent of the time. If these were the only tests you conducted, the data might lead you to conclude that heads come up 60 percent of the time, which is false.

The problem is the sample size. With only 10 tosses per test, random fluctuation alone can easily produce a misleading 60 percent result.
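To see how unremarkable a 60 percent result is, you can compute the exact binomial probability of getting at least 6 heads in 10 fair tosses. This is a minimal sketch using Python's `math.comb`; `prob_at_least` is a hypothetical helper name.

```python
from math import comb

def prob_at_least(k: int, n: int, p: float = 0.5) -> float:
    """Probability of at least k successes in n independent trials (binomial upper tail)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

print(f"P(at least 6 heads in 10 tosses)   = {prob_at_least(6, 10):.3f}")  # 0.377
print(f"P(at least 60 heads in 100 tosses) = {prob_at_least(60, 100):.3f}")
```

The first probability is 386/1024, about 0.377, so more than a third of all 10-toss tests would show 60 percent heads or better even with a perfectly fair coin. With 100 tosses, reaching 60 percent heads becomes much rarer.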

The same thing can happen in A/B testing. Imagine a marketer who A/B tests a change to a landing page. He gets a sample of 500 people and determines that the change improves conversions by 20 percent. Is that conclusion correct? Could a similar result appear even if there were no real difference between the pages being compared? The answer is probably yes: a 20 percent swing could easily be random fluctuation.
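One common way to ask whether such a lift could be noise is a pooled two-proportion z-test. The sketch below assumes the 500 visitors were split evenly, 250 per branch, with 25 conversions on the original page (10 percent) and 30 on the changed page (12 percent), which is a 20 percent relative lift. These counts are hypothetical; the example above does not give them.

```python
from math import erfc, sqrt
from typing import Tuple

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Pooled two-proportion z-test; returns (z statistic, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate assuming A and B are identical
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail area of the standard normal
    return z, p_value

# Hypothetical counts: 10% vs. 12% conversion, a 20 percent relative lift
z, p = two_proportion_z_test(conv_a=25, n_a=250, conv_b=30, n_b=250)
print(f"z = {z:.2f}, two-sided p = {p:.2f}")
```

With these numbers the p-value comes out around 0.47, far above the conventional 0.05 threshold, so the apparent 20 percent lift is entirely consistent with random fluctuation.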

So how do you avoid this mistake? There are a couple of approaches, but the simplest is to increase the sample size. Estimate how many participants each branch needs, and run the A/B test until each branch reaches that number.

Evan Miller, who wrote about this particular problem with A/B testing back in 2010, offers a helpful tool for estimating the sample size each branch of an A/B test needs so that you are not simply measuring a random fluctuation.
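For a rough sense of the numbers involved, the sketch below uses a common textbook approximation for the per-branch sample size of a two-sided, two-proportion test. It is not necessarily the exact method behind Miller's calculator. The 10 percent baseline conversion rate, 20 percent relative lift, 5 percent significance level, and 80 percent power are assumptions chosen for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_branch(baseline: float, relative_lift: float,
                           alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH branch to detect the lift (two-sided test)."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)

print(sample_size_per_branch(baseline=0.10, relative_lift=0.20))
```

Under those assumptions the function returns a little over 3,800 visitors per branch, far more than the 500 total in the landing-page example. Detecting a smaller lift requires even more traffic.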

2. Not Examining the Data for Unexpected Differences

When a marketer uses A/B testing for conversion rate optimization, often the only thing that is examined is, well, the conversion rate. But this too can lead to poor results.

Imagine an online retailer who has just spent a lot of money to create a mobile-optimized version of its ecommerce site. Before completely launching the mobile version, the retailer conducts an A/B test, ensuring that each branch of the test (A and B) will have a sufficient number of data points (users). Alternating site visitors are shown either the old site or the new mobile-optimized site.

When the test is complete, the data shows that the mobile-optimized version actually reduced conversions by six percent. If the retailer stopped here, it might never launch the mobile-optimized version of its site, concerned that its sales would drop significantly.

On further review, however, the test had a fatal flaw: it gave no consideration to the type of device each visitor was using. As a result, it is possible that most mobile visitors, who would actually have benefited from the mobile-optimized site, were shown the old version. A better setup would have distributed desktop and mobile users evenly, so that about half of the mobile users saw version A and about half saw version B, and likewise for desktop users.
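The balanced setup described above amounts to stratified assignment: alternate between A and B separately within each device type rather than across all visitors. The class below is a hypothetical, in-memory sketch of that idea. Real testing tools typically randomize assignment rather than strictly alternating, so treat it as an illustration of the balancing, not a drop-in implementation.

```python
from collections import defaultdict
from typing import Dict

class StratifiedAlternator:
    """Alternate A/B assignment separately within each device type (stratum)."""

    def __init__(self) -> None:
        self._counts: Dict[str, int] = defaultdict(int)  # visitors seen so far, per device

    def assign(self, device: str) -> str:
        # Even-numbered visitors of a given device get A, odd-numbered get B.
        branch = "A" if self._counts[device] % 2 == 0 else "B"
        self._counts[device] += 1
        return branch

assigner = StratifiedAlternator()
visitors = ["desktop", "mobile", "mobile", "desktop", "mobile", "mobile"]
print([assigner.assign(d) for d in visitors])  # ['A', 'A', 'B', 'B', 'A', 'B']
```

However visitors arrive, each device type ends up with A and B counts that differ by at most one.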

To avoid this sort of error, examine all of the available data, such as which devices visitors used, not just the conversion rate.
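Examining the data by segment can be as simple as computing a conversion rate for every device and branch combination instead of one rate per branch. The sketch below assumes each visit is logged as a hypothetical `(branch, device, converted)` tuple; the sample records are made up.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Visit = Tuple[str, str, bool]  # (branch, device, converted)

def conversion_by_segment(visits: Iterable[Visit]) -> Dict[Tuple[str, str], float]:
    """Conversion rate for every (device, branch) pair, not just per branch overall."""
    totals: Dict[Tuple[str, str], int] = defaultdict(int)
    conversions: Dict[Tuple[str, str], int] = defaultdict(int)
    for branch, device, converted in visits:
        key = (device, branch)
        totals[key] += 1
        conversions[key] += int(converted)
    return {key: conversions[key] / totals[key] for key in sorted(totals)}

# Hypothetical log entries
visits = [
    ("A", "mobile", False), ("A", "mobile", False), ("B", "mobile", True),
    ("A", "desktop", True), ("B", "desktop", False), ("B", "desktop", True),
]
for (device, branch), rate in conversion_by_segment(visits).items():
    print(f"{device:8} {branch}: {rate:.0%}")
```

In a real test, each segment also needs a large enough sample on its own, or the per-segment rates will suffer from the random fluctuation problem described in the first section.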

3. Testing Too Many Variables

One of the most common A/B tests an ecommerce business might conduct involves a site redesign. For example, a merchant might want to determine whether a completely new and improved checkout works better than the old (and completely different) one.

This sort of test, conducted well, can give some good insights. But if the pages being compared are radically different, the test might be trying to measure too many variables.

Try to set up A/B tests so that they measure or test a small set of significant differences in two or more pages. For example, given the same graphic design, does promotional text A convert better than promotional text B? Or given identical promotional copy and layout, does graphic design A convert better than graphic design B?
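One lightweight safeguard is to describe each variant as a set of page elements and check how many of them differ before launching the test. The sketch below is hypothetical: the element names, the example copy, and the limit of one changed element are illustrative assumptions, not rules from any testing tool.

```python
from typing import Any, Dict, List

MAX_CHANGES = 1  # arbitrary illustrative limit on elements changed per test

def changed_elements(control: Dict[str, Any], variant: Dict[str, Any]) -> List[str]:
    """List the page elements whose values differ between two variant descriptions."""
    keys = control.keys() | variant.keys()  # union, so added or removed elements count too
    return sorted(k for k in keys if control.get(k) != variant.get(k))

control = {"design": "classic", "promo_text": "Free shipping over $50", "layout": "two-column"}
variant = {"design": "classic", "promo_text": "20% off today only", "layout": "two-column"}

diff = changed_elements(control, variant)
print(diff)  # ['promo_text']
if len(diff) > MAX_CHANGES:
    print("Warning: this test changes several elements at once; results will be hard to attribute.")
```

Here only the promotional text differs, which matches the "same graphic design, different promotional text" style of test.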

Be careful not to take this one too far, or you can waste a lot of time testing insignificant things. It probably doesn’t matter if the headline font is 16 points or 18.