A/B testing is a method for measuring the relative effectiveness of certain page content or marketing promotions. When properly applied, A/B testing may help marketers significantly improve conversion rates and establish a culture of improvement.

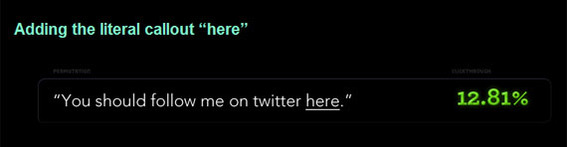

In 2009, blogger Dustin Curtis conducted an experiment on his site. At the bottom of each blog post, he included a link that read, “I’m on Twitter.” The link converted about 4.7 percent of the time. Curtis changed the link to read, “Follow me on Twitter,” and found that the conversion rate rose to some 7.31 percent. Eventually, the anchor text — the portion of the link that is publicly visible — was changed to “You should follow me on Twitter here,” which converted at a rate of 12.81 percent.

Dustin Curtis tested anchor text on his blog to improve conversion rates.

In a strict sense, Curtis’ experiment could have been improved. For example, the various forms of the anchor text should have been tested simultaneously rather than consecutively, so that differences in traffic or timing between the test periods could not skew the results. But in general, it demonstrates the process of improvement that A/B testing may lead to.
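To put Curtis’ numbers in relative terms, the short Python sketch below computes how much each new anchor text improved on the original. The function name `relative_lift` is an illustrative choice, not something from Curtis’ write-up; the rates are the ones quoted above.

```python
def relative_lift(baseline_rate, variant_rate):
    """Return the relative improvement of a variant over a baseline, as a fraction."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (variant_rate - baseline_rate) / baseline_rate


# Conversion rates (in percent) quoted above for each anchor text.
baseline = 4.7
variants = {
    "Follow me on Twitter": 7.31,
    "You should follow me on Twitter here": 12.81,
}

for text, rate in variants.items():
    lift = relative_lift(baseline, rate)
    print(f"{text!r}: {lift:.1%} relative lift over the original link")
```

A relative lift is what a statement like "21.8 percent better" usually refers to, as opposed to a difference in percentage points.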

Separately, ecommerce platform maker Elastic Path conducted an A/B test on the Official 2010 Vancouver Olympics Store website. Shoppers were randomly directed to either a standard multi-page checkout or a single-page checkout to determine whether the checkout process itself could affect shopping cart abandonment rates. After 606 transactions, the single-page checkout was converting at a rate 21.8 percent better than the multi-page checkout.

What is A/B Testing?

In its simplest form, A/B testing compares the performance of two versions of a piece of marketing content that differ in a single element. It looks at “A” and “B” to learn which converts better.

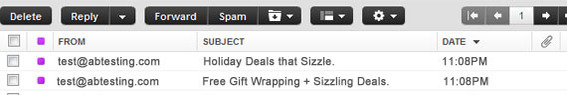

An example may help make the method a bit clearer. Imagine an ecommerce marketer who wants to send out an email promotion. This marketer wishes to maximize the number of emails that are opened, so two potential subject lines are proposed. The first is (A) “Holiday Deals that Sizzle.” The second is (B) “Free Gift Wrapping + Sizzling Deals.”

Compare email subject lines to determine which will earn a better open rate.

The marketer in this example has a list of some 40,000 email recipients who have opted to receive promotional material. But rather than immediately sending the email to everyone, the marketer randomly selects a statistically significant sample of perhaps 2,000 people. The first 1,000 recipients are sent the promotion using subject A. Simultaneously, the second 1,000 recipients are sent the promotion using subject B. The marketer monitors the results for 24 hours.
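The random selection described above might look something like the following Python sketch. The recipient addresses are made-up placeholders, and `split_sample` is a hypothetical helper, not part of any email platform.

```python
import random


def split_sample(recipients, sample_size, seed=None):
    """Randomly draw sample_size recipients and split them into two equal groups."""
    if sample_size % 2 != 0:
        raise ValueError("sample_size must be even so both groups are the same size")
    rng = random.Random(seed)  # a seed makes the draw repeatable
    sample = rng.sample(recipients, sample_size)  # sampling without replacement
    half = sample_size // 2
    return sample[:half], sample[half:]


# Placeholder list standing in for the 40,000 opted-in recipients.
recipients = [f"user{i}@example.com" for i in range(40_000)]

group_a, group_b = split_sample(recipients, 2_000, seed=42)
print(len(group_a), "recipients get subject A")
print(len(group_b), "recipients get subject B")
```

Because the sample is drawn at random before it is split, neither group should be systematically different from the other, which is what makes the comparison fair.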

At the end of the sample period, the marketer compares the open rates for email recipients that received subject line A with the open rates for those who received subject line B. That is an A/B test.
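A difference in open rates is only useful if it is unlikely to be chance. One common way to check is a two-proportion z-test, sketched below in Python. This is one reasonable approach, not necessarily what any particular tool uses, and the open counts (200 and 250) are invented for illustration.

```python
import math


def two_proportion_z_test(opens_a, sent_a, opens_b, sent_b):
    """Return (z, two-sided p-value) for the difference between two open rates."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pooled rate under the assumption that A and B perform the same.
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        raise ValueError("cannot test when every or no email was opened")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 200 of 1,000 opened subject A, 250 of 1,000 opened subject B.
z, p_value = two_proportion_z_test(200, 1_000, 250, 1_000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
if p_value < 0.05:
    print("The difference is unlikely to be due to chance alone.")
else:
    print("The difference could plausibly be due to chance.")
```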

Using A/B Testing

Using A/B testing may start with something as simple as the email subject line example just described. But ultimately, the method should evolve into a culture of improvement.

For example, if the marketer testing subject lines stopped after a single test and assumed that adding the word “Free” to a subject line would always boost open rates, there could be lots of missed opportunities. There may be other subject lines that would work even better. Other factors could come into play too.

So a marketer should consider making A/B testing a habit, using it on email subject lines, product descriptions, “buy now” buttons — Amazon is famous for constantly A/B testing its buy now and add to cart buttons — or any site content or promotion that is important to the marketer’s business.

Software-as-a-service company 37Signals, as another example, posted on its blog the results of a series of A/B tests that it conducted in 2009. Specifically, the company found that improvements to the signup-page headline, which was also its primary call to action, resulted in a 30-percent improvement in conversion rates.

It is also important to be patient with A/B testing. In some cases, a marketer may need to test for a longer period of time, perhaps weeks for something like “buy now” buttons or days for pay-per-click ad landing pages.
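How long is long enough depends mostly on traffic and on how small a difference the marketer hopes to detect. The Python sketch below uses a standard textbook approximation for comparing two conversion rates; the 5 percent significance level, 80 percent power, example rates, and daily visitor count are all assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline, expected, alpha=0.05, power=0.8):
    """Approximate visitors needed per variation to detect baseline vs. expected."""
    if baseline == expected:
        raise ValueError("baseline and expected rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_power = NormalDist().inv_cdf(power)
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    n = (z_alpha + z_power) ** 2 * variance / (baseline - expected) ** 2
    return math.ceil(n)


def days_needed(n_per_group, daily_visitors):
    """Days required if daily_visitors are split evenly between A and B."""
    return math.ceil(2 * n_per_group / daily_visitors)


# Hypothetical: a button converting at 2.0% that we hope to lift to 2.5%,
# on a page receiving 1,500 visitors per day.
n = sample_size_per_group(0.02, 0.025)
print(f"About {n} visitors per variation")
print(f"Roughly {days_needed(n, 1_500)} days of traffic")
```

The smaller the expected improvement, the more visitors are needed, which is why tests on low-traffic pages can take weeks.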

And finally, the test should be set up so that both the “A” and “B” variations are tested at the same time and in such a way that individual visitors only see a single variation, even on repeat visits.
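One simple way to keep a visitor on the same variation across visits is to derive the assignment from a stable visitor identifier instead of flipping a coin on every page load. The Python sketch below assumes such an identifier exists (a cookie value or account ID, for instance); `assign_variation` and the experiment name are hypothetical.

```python
import hashlib


def assign_variation(visitor_id, experiment="checkout-test"):
    """Deterministically map a visitor to "A" or "B" for a given experiment."""
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # The hash is effectively random across visitors but fixed for each one.
    return "A" if int(digest, 16) % 2 == 0 else "B"


# The same visitor always lands in the same group.
first_visit = assign_variation("visitor-12345")
repeat_visit = assign_variation("visitor-12345")
print(first_visit, repeat_visit, first_visit == repeat_visit)

# Across many visitors, the split comes out close to even.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variation(f"visitor-{i}")] += 1
print(counts)
```

Including the experiment name in the hash means a visitor who lands in group A for one test is not automatically in group A for every other test.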

There are several A/B testing tools that will help with the testing process, including Google’s Website Optimizer, Visual Website Optimizer, and Vertster, just to name three. Visual Website Optimizer also has a nice calculator for determining how significant a finding may be.

Building the Culture of Improvement

When A/B testing becomes a normal part of a company’s marketing process, a culture of improvement is born. In this culture, there is a drive to constantly improve and a willingness to change in response to data.