Generative AI platforms such as ChatGPT, Perplexity, and Claude now run live web searches for many prompts. Making a site crawlable by AI bots is therefore essential for earning mentions and citations on those platforms.

Here’s how to optimize a website for AI crawlers.

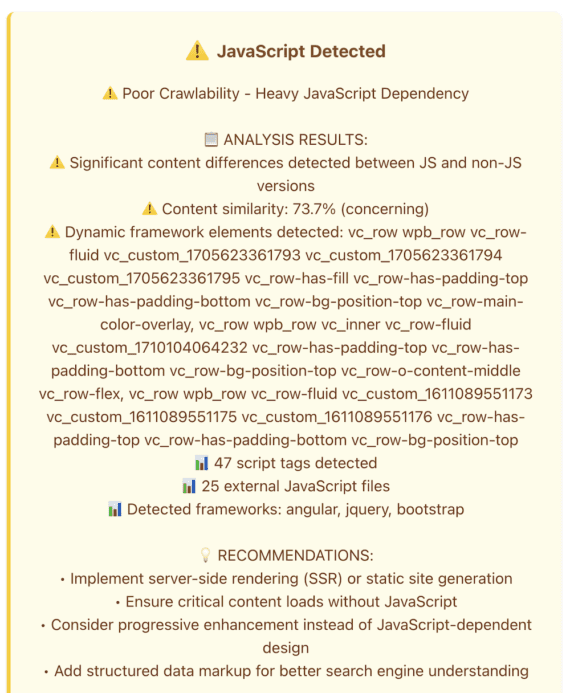

Disable JavaScript

Make sure your pages are readable with JavaScript disabled.

AI bots are less mature than Google’s crawler. Tests by industry practitioners indicate that many AI crawlers cannot reliably render JavaScript.

Most publishers and businesses no longer worry about JavaScript crawlability because Google has rendered those pages for years. As a result, many sites now depend heavily on JavaScript.

The Chrome browser can load a site with JavaScript turned off. To set that up:

- Go to your site using Chrome.

- Open Developer Tools at View > Developer > Developer Tools.

- Click Settings (behind the gear icon) on the right side of the panel.

- Scroll down and check the option “Disable JavaScript” under “Debugger.”

Now browse your site, making sure:

- All essential content is visible, especially behind tabs and drop-down menus.

- The navigation menu and other links are clickable.

- For video embeds, there’s an option to click to the original video, access a transcript, or both.
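
The manual check above can also be approximated in a script. The following is a minimal sketch, not a definitive audit: it assumes a crawler that reads only the raw HTML and ignores scripts, and the names `static_text`, `missing_phrases`, and `fetch_html`, along with the sample page and the user-agent string, are hypothetical and chosen for illustration.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class TextExtractor(HTMLParser):
    """Collects the text present in raw HTML, ignoring script and style bodies."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only text that sits outside <script> and <style>.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data)


def static_text(html: str) -> str:
    """Return the text a non-rendering bot could read, with whitespace collapsed."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.chunks).split())


def missing_phrases(html: str, phrases: list[str]) -> list[str]:
    """List the phrases that do not appear in the static text of the page."""
    text = static_text(html).lower()
    return [p for p in phrases if p.lower() not in text]


def fetch_html(url: str) -> str:
    """Download a page without executing any JavaScript (hypothetical user agent)."""
    request = Request(url, headers={"User-Agent": "static-content-check/0.1"})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


# Sample page: the return policy exists only after JavaScript runs.
sample = """<html><body><nav><a href="/pricing">Pricing</a></nav>
<div id="app"></div>
<script>document.getElementById("app").textContent = "Returns accepted within 30 days";</script>
</body></html>"""

print(missing_phrases(sample, ["Pricing", "Returns accepted within 30 days"]))
# prints ['Returns accepted within 30 days']
```

To test a live page, pass `fetch_html("https://yoursite.com/")` in place of `sample`, with the phrases that matter most on that page. A phrase reported as missing is a hint that the content depends on JavaScript, not proof that every AI bot fails to see it.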

You can use Aiso, an AI optimization platform, to check that AI bots can access and crawl your site. The free trial includes a few checks. Go to the “Website crawlability” section and enter your URL.

The tool reviews the site, suggests ways to improve access for AI crawlers, and shows how pages appear with JavaScript disabled.

Ensure AI Access

Make sure your site allows access for AI bots. Some content management platforms and plugins block AI bots by default, often without the site owner’s knowledge.

To check, review your robots.txt file at [yoursite.com]/robots.txt.

The AI platforms themselves can interpret the file and confirm whether it allows access. Paste your robots.txt URL into a ChatGPT prompt, for example, and request an analysis.
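
The same check can be run locally with Python's standard `urllib.robotparser` module. This is a sketch under stated assumptions: the user-agent tokens `GPTBot`, `ClaudeBot`, and `PerplexityBot` are examples only, since each vendor publishes its own list and changes it over time, and the function name `ai_access_report` and the sample file are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Assumed crawler tokens; confirm the current names in each vendor's documentation.
AI_USER_AGENTS = ["GPTBot", "ClaudeBot", "PerplexityBot"]


def ai_access_report(robots_txt: str, url: str, agents=AI_USER_AGENTS) -> dict:
    """Map each user agent to True if robots.txt lets it fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


# Sample file that blocks one AI bot entirely and restricts /admin/ for everyone else.
sample_robots = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

report = ai_access_report(sample_robots, "https://example.com/blog/post")
for agent, allowed in report.items():
    print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

In this sample, `GPTBot` is reported as blocked and the other two as allowed. To check a live site, `RobotFileParser` also offers `set_url()` and `read()`, which download the file instead of parsing a string.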

Structured Data

Structured data markup, such as from Schema.org, can also help ensure visibility.

Schema markup makes it easier for AI bots to extract essential information from a page (or work around content hidden behind interactive elements) without crawling it in full.

For example, many website FAQ sections use collapsible elements that AI bots cannot open. Schema’s FAQPage type restates all questions and answers in the markup, making them visible to bots.
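
As an illustration, FAQPage markup is usually embedded as JSON-LD in a script tag. The sketch below builds that tag from question-and-answer pairs. The helper name `faq_jsonld` and the sample question are hypothetical, and the output should be validated before publishing.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a Schema.org FAQPage JSON-LD script tag from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so answer text containing "</script>" cannot close the tag early.
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(faq_jsonld([("Do you ship abroad?", "Yes, to most countries.")]))
```

The questions and answers in the markup should match the text shown on the page, so the collapsed content and the structured data stay in sync.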

Similarly, Schema’s Article type can communicate the context and authorship of content.